Prompt Engineering for Technical Documentation Automation in VS Code with GitHub Copilot

In the era of AI-assisted development, producing accurate, consistent, and maintainable documentation has become critical. Developers writing docs by hand often face time constraints and style drift. By combining prompt engineering with VS Code and GitHub Copilot, teams can automate much of the drafting work at scale, while human review keeps quality in check. This article explains how to build effective prompt workflows, use Copilot’s tooling, and maintain high documentation standards.

How Prompt Engineering Enhances Documentation in Coding Environments

Prompt engineering is a methodology in which developers craft precise queries or instructions for AI systems to produce consistent, targeted results. Applied to documentation, it enables the generation of function explanations, module descriptions, and usage examples with minimal manual effort. The payoff can be reduced documentation debt and faster onboarding for new team members.

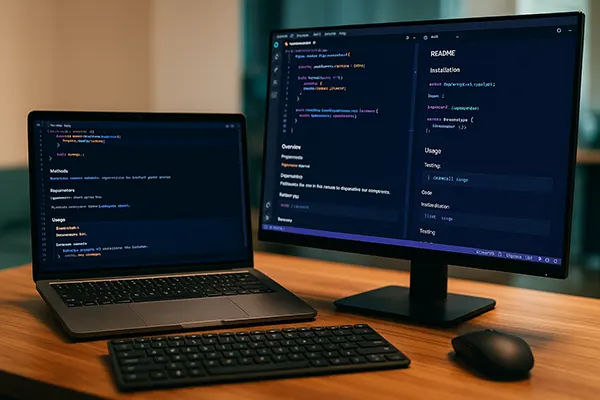

In Visual Studio Code (VS Code), prompt engineering is applied through the GitHub Copilot and GitHub Copilot Chat extensions. By writing structured comments or issuing prompts directly while coding, developers can generate summaries and explanations aligned with the project’s purpose. Prompts that work well can be shared across teams, and VS Code also supports repository-level custom instructions in a `.github/copilot-instructions.md` file, which improves consistency in large codebases.

For example, a prompt such as “Write a docstring for this function including input types, expected output, and a usage example” can produce a detailed first draft in seconds. Developers can iterate on the prompt’s wording to fit their documentation culture, reducing the time spent editing AI output.
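As an illustration, the sketch below shows the shape of docstring such a prompt asks for, written as a Google-style Python docstring with a doctest usage example. The function `parse_duration` and its behaviour are hypothetical, chosen only to give the docstring something concrete to describe. It is not output captured from Copilot.

```python
import re

# Hypothetical helper used only to illustrate the requested docstring shape.
_PART = re.compile(r"(\d+)([hms])")
_UNIT_SECONDS = {"h": 3600, "m": 60, "s": 1}


def parse_duration(text: str) -> int:
    """Convert a compact duration string such as "1h30m" into seconds.

    Args:
        text (str): Duration made of hour (h), minute (m) and second (s)
            components, e.g. "2h", "45s" or "1h30m".

    Returns:
        int: Total duration in seconds.

    Raises:
        ValueError: If ``text`` is empty or contains anything other than
            number-unit pairs.

    Example:
        >>> parse_duration("1h30m")
        5400
    """
    if not text:
        raise ValueError("empty duration")
    parts = _PART.findall(text)
    # Reject input with characters the pattern did not consume, e.g. "10x".
    if "".join(number + unit for number, unit in parts) != text:
        raise ValueError(f"unrecognised duration: {text!r}")
    return sum(int(number) * _UNIT_SECONDS[unit] for number, unit in parts)
```

Running `python -m doctest` on the file checks that the usage example in the docstring still matches the code. This is a cheap guard against documentation drifting away from behaviour.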

Examples of Prompts and Their Use Cases

Here are some prompt templates developers can embed into comments or Copilot commands:

1. For functions: “Generate a Python docstring explaining this function’s purpose, parameters, return type, and edge cases.”

2. For modules: “Summarise the role of this module, its dependencies, and integration with other components.”

3. For README sections: “Draft a README segment describing how to install, configure, and test this module, including CLI commands.”
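One way to make these templates reusable across a team is to keep them in a shared module and render them as comments in whatever language is being documented. The registry name, the `prompt_comment` helper, and its parameters below are hypothetical. The template wording is taken from the list above.

```python
from textwrap import wrap

# Hypothetical shared registry; the wording mirrors the templates listed above.
PROMPT_TEMPLATES = {
    "function": (
        "Generate a Python docstring explaining this function's purpose, "
        "parameters, return type, and edge cases."
    ),
    "module": (
        "Summarise the role of this module, its dependencies, "
        "and integration with other components."
    ),
    "readme": (
        "Draft a README segment describing how to install, configure, "
        "and test this module, including CLI commands."
    ),
}


def prompt_comment(kind: str, prefix: str = "# ", width: int = 72) -> str:
    """Render a shared template as a comment block to place above the code."""
    text = PROMPT_TEMPLATES[kind]  # raises KeyError for an unknown template
    # Leave room for the comment prefix so no line exceeds `width`.
    lines = wrap(text, width=width - len(prefix))
    return "\n".join(prefix + line for line in lines)


print(prompt_comment("function"))
print(prompt_comment("module", prefix="// "))
```

Keeping the wording in one place means a refinement to a template reaches every team at once, instead of drifting across copy-pasted variants.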

Using GitHub Copilot Docs and Markdown for Efficient Integration

GitHub’s Copilot for Docs, announced as a technical preview in 2023, was built to answer questions grounded in documentation. Inside VS Code, the day-to-day tool for documentation work is Copilot Chat, which can draft documentation with the open file as context. Requesting its output as Markdown keeps documentation readable and version-controllable, and VS Code’s built-in Markdown preview makes it easy to verify formatting and structure as you edit.

Because Copilot’s models were trained on large amounts of public code, its suggestions tend to follow widely used documentation conventions, which provides some style guidance without an external search. For instance, when documenting a JavaScript module, Copilot will often propose a familiar README structure that the team can then adapt.

Markdown offers structure through headers, code blocks, and lists, which helps organise generated content. By prompting Copilot to format outputs as Markdown, teams maintain documentation compatibility across wikis, GitHub repositories, and static sites.
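Because the generated content is plain Markdown, its structure can also be checked programmatically rather than only by eye in the preview. The minimal sketch below uses a hypothetical `heading_outline` helper to list the ATX headings (`#` to `######`) outside fenced code blocks, so a reviewer or CI job can compare them against an expected outline. It deliberately ignores Setext headings and fence-length rules, so treat it as a rough check rather than a full CommonMark parser.

```python
import re

# ATX heading: 1-6 '#' characters, whitespace, then the title text.
HEADING = re.compile(r"^(#{1,6})\s+(.*\S)\s*$")


def heading_outline(markdown: str) -> list[tuple[int, str]]:
    """Return (level, title) for each ATX heading outside fenced code blocks."""
    outline = []
    in_fence = False
    for line in markdown.splitlines():
        # Simplification: any ``` or ~~~ line toggles fence state.
        if line.lstrip().startswith(("```", "~~~")):
            in_fence = not in_fence
            continue
        if in_fence:
            continue  # '#' inside code (e.g. shell comments) is not a heading
        match = HEADING.match(line)
        if match:
            outline.append((len(match.group(1)), match.group(2)))
    return outline


sample = "\n".join([
    "# Widget",
    "## Installation",
    "```bash",
    "# install from npm",
    "```",
    "## Usage",
])
print(heading_outline(sample))
```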

Improving Linting and Style with Prompt Automation

AI-generated documentation often suffers from inconsistencies in tone, formatting, or language. To mitigate this, developers should run tools such as `markdownlint` (Markdown structure), `prettier` (formatting), and `vale` (prose style) in pre-commit hooks or the CI/CD pipeline, so documentation standards are enforced automatically on every commit or pull request.
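A small wrapper script is one way to run the same checks locally and in CI. The sketch below assumes the `markdownlint` (from markdownlint-cli), `prettier`, and `vale` command-line tools are installed on the PATH and that documentation lives in `README.md` and a `docs/` directory. Both that layout and the helper name `run_doc_checks` are assumptions. Tools that are missing are skipped with a warning rather than failing the run.

```python
import shutil
import subprocess
import sys

# Assumed layout: Markdown lives in README.md and the docs/ directory.
CHECKS = [
    ["markdownlint", "README.md", "docs"],         # Markdown structure rules
    ["prettier", "--check", "README.md", "docs"],  # report formatting, no rewrite
    ["vale", "README.md", "docs"],                 # prose style (needs .vale.ini)
]


def run_doc_checks(checks: list[list[str]] = CHECKS) -> int:
    """Run each available checker and return how many reported problems."""
    failures = 0
    for cmd in checks:
        if shutil.which(cmd[0]) is None:
            print(f"skipping {cmd[0]}: not found on PATH", file=sys.stderr)
            continue
        result = subprocess.run(cmd)
        if result.returncode != 0:
            failures += 1
    return failures
```

In a CI step, calling `sys.exit(1 if run_doc_checks() else 0)` turns any linter failure into a failed job. Skipping missing tools keeps the script usable on laptops, but a CI image should install all three so nothing is silently skipped.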

Prompting Copilot with style guides is another effective approach. For instance: “Generate a JSDoc block using British English, third-person voice, and concise formatting.” This steers outputs towards organisational writing standards, although the linters still need to confirm the result.
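Prompt instructions steer the output but do not guarantee it, so a cheap automated check is a useful complement. The sketch below is a deliberately tiny, hypothetical spelling check for a British English requirement. In practice a Vale style with a full word list would do this job, and the five-entry mapping here is illustrative only.

```python
import re

# Illustrative US -> UK stems only; a real style check needs a full word list.
US_TO_UK = {
    "behavior": "behaviour",
    "color": "colour",
    "initialize": "initialise",
    "organize": "organise",
    "summarize": "summarise",
}


def american_spellings(text: str) -> list[tuple[str, str]]:
    """Return (word found, preferred British stem) pairs, grouped by stem."""
    hits = []
    for us_stem, uk_stem in US_TO_UK.items():
        # A leading \b skips stems that start mid-word; \w* catches suffixes.
        for match in re.finditer(rf"\b{us_stem}\w*", text, flags=re.IGNORECASE):
            hits.append((match.group(0), uk_stem))
    return hits


print(american_spellings("Initializes the color map and logs behavior."))
```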

Teams can also create reusable README templates. Prompt examples such as “Insert a badge section, installation instructions, usage examples, and license details for a TypeScript project” can help Copilot pre-fill skeletons for new projects.
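A reusable template can also live in code, so every new project starts from the same skeleton and Copilot (or a person) only fills in the marked gaps. The `readme_skeleton` function below is a hypothetical sketch for a TypeScript package published to npm. The section list and the placeholder comments are assumptions to adapt to your own conventions.

```python
def readme_skeleton(project: str, package: str, license_name: str = "MIT") -> str:
    """Return a README skeleton with TODO markers left for Copilot or a human."""
    fence = "`" * 3  # built at runtime so this example nests cleanly in Markdown
    parts = [
        f"# {project}",
        "<!-- TODO: badges (build status, npm version, licence) -->",
        "## Installation",
        f"{fence}bash\nnpm install {package}\n{fence}",
        "## Usage",
        f"{fence}ts\nimport {{ /* TODO */ }} from \"{package}\";\n{fence}",
        "## License",
        f"Released under the {license_name} License.",
    ]
    return "\n\n".join(parts) + "\n"


print(readme_skeleton("Widget", "widget-lib"))
```

Generating the skeleton first and then prompting Copilot section by section keeps each prompt narrowly scoped, which reduces the overgeneration problem described below.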

Challenges in Prompt-Driven Documentation and How to Avoid Them

Despite its benefits, prompt-driven documentation isn’t without flaws. One of the most common issues is overgeneration: the AI adds redundant or speculative content that bloats the documentation. This typically happens when prompts are too vague or are not scoped to the function’s or module’s context.

Another issue is hallucinated content, where Copilot invents parameters or behaviours not present in the actual code. This can mislead developers and introduce documentation errors, especially in security-critical codebases. Validating AI output is essential before merging any auto-generated docs.
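Part of that validation can be automated. For Python code documented with Google-style docstrings, comparing the parameters listed under `Args:` with the function’s real signature catches one common hallucination: a documented parameter that does not exist. The helpers below are a hypothetical sketch built on the standard `inspect` module. They assume the Google docstring layout and will not understand other formats such as NumPy or reStructuredText field lists. The `scale` function is a made-up example whose docstring documents a nonexistent `clamp` parameter.

```python
import inspect
import re

# An "Args:" entry indented exactly four spaces, e.g. "    factor (float): ..."
_ARG_LINE = re.compile(r"^ {4}(\*{0,2}\w+)\s*(?:\([^)]*\))?:")
_ARG_HEADERS = {"Args:", "Arguments:", "Parameters:"}


def documented_params(func) -> set[str]:
    """Collect parameter names listed in a Google-style Args section."""
    doc = inspect.getdoc(func) or ""  # getdoc also normalises indentation
    names: set[str] = set()
    in_args = False
    for line in doc.splitlines():
        stripped = line.strip()
        if stripped in _ARG_HEADERS:
            in_args = True
            continue
        if in_args:
            # An unindented, non-blank line starts the next section.
            if stripped and not line.startswith(" "):
                break
            match = _ARG_LINE.match(line)
            if match:
                names.add(match.group(1).lstrip("*"))
    return names


def param_mismatches(func) -> tuple[set[str], set[str]]:
    """Return (invented, undocumented) parameter names for func."""
    actual = {
        name for name in inspect.signature(func).parameters
        if name not in ("self", "cls")
    }
    documented = documented_params(func)
    return documented - actual, actual - documented


def scale(values, factor=2.0):
    """Multiply each value by a factor.

    Args:
        values: Numbers to scale.
        factor (float): Multiplier applied to each value.
        clamp: Upper bound for each result.

    Returns:
        A new list of scaled values.
    """
    return [v * factor for v in values]


invented, undocumented = param_mismatches(scale)
print(invented, undocumented)  # prints {'clamp'} set()
```

A check like this only covers parameter names. Invented behaviours or return values still need a human reviewer.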

Moreover, repeated prompts across similar modules may result in duplicated wording or boilerplate. This undermines readability and hurts the perceived quality of the docs. Variation in prompt phrasing and periodic manual reviews can help alleviate this.
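Repeated boilerplate can be surfaced before a manual review. The sketch below compares every pair of generated doc snippets with the standard library’s `difflib.SequenceMatcher` and reports pairs above a similarity threshold. The helper name and the 0.9 threshold are assumptions to tune. Pairwise comparison is quadratic, so for large doc sets a hashing approach would scale better.

```python
from difflib import SequenceMatcher
from itertools import combinations


def _normalise(text: str) -> str:
    # Ignore case and whitespace differences so only wording counts.
    return " ".join(text.lower().split())


def near_duplicates(
    docs: dict[str, str], threshold: float = 0.9
) -> list[tuple[str, str, float]]:
    """Return (name_a, name_b, similarity) for snippet pairs at or above threshold."""
    pairs = []
    for (name_a, text_a), (name_b, text_b) in combinations(docs.items(), 2):
        ratio = SequenceMatcher(None, _normalise(text_a), _normalise(text_b)).ratio()
        if ratio >= threshold:
            pairs.append((name_a, name_b, round(ratio, 2)))
    return pairs


snippets = {
    "auth.py": "Returns the parsed config as a dictionary.",
    "cache.py": "Returns the parsed configuration as a dictionary.",
    "net.py": "Opens a socket to the given host and port.",
}
print(near_duplicates(snippets))
```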

Recommendations to Maximise Accuracy and Minimise Risk

1. Always contextualise prompts with inline code comments or direct code references. Avoid abstract instructions without grounding in actual source files.

2. Use short, clear prompts that explicitly define scope (e.g. “Document only the return value and error cases”). Avoid overly broad instructions.

3. Schedule regular documentation audits by human reviewers to catch inaccuracies or repeated phrasing before releasing updates.
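The first two recommendations can be combined in a small helper that pulls one function’s exact source out of a file and prefixes it with a narrowly scoped instruction, so the prompt is grounded in real code rather than a description of it. The `grounded_prompt` function and its wording are hypothetical. The text it returns is meant to be pasted into Copilot Chat, not sent through any particular API.

```python
import ast


def grounded_prompt(module_source: str, func_name: str, scope: str) -> str:
    """Build a prompt containing only the named function's source code."""
    tree = ast.parse(module_source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) and node.name == func_name:
            # Exact source text of the function (decorators excluded).
            snippet = ast.get_source_segment(module_source, node)
            return (
                f"{scope} Base the documentation only on the code below and do not "
                "mention parameters, exceptions or behaviour it does not contain.\n\n"
                f"{snippet}"
            )
    raise LookupError(f"no function named {func_name!r}")


example_module = (
    "import math\n\n"
    "def circle_area(radius: float) -> float:\n"
    "    if radius < 0:\n"
    "        raise ValueError('radius must be non-negative')\n"
    "    return math.pi * radius ** 2\n\n"
    "def unrelated():\n"
    "    pass\n"
)
print(grounded_prompt(example_module, "circle_area",
                      "Document only the return value and error cases."))
```

Sending one function at a time, rather than the whole file, also keeps the model from borrowing details from neighbouring code.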